Topic

This year's project will focus on web caching. A web cache is what makes your browser fast (or not, if it fails) by holding copies of popular websites in your browser cache, on a computer close to you (aka. a content delivery network), and in large server farms (aka. web accelerators).

At the heart of browser performance lies the problem of selecting which web objects you'd like to have copies of, which is known as a caching policy. This project will center on the performance evaluation of various caching policies. We will experiment with various caching policies and evaluate their performance on request traces of one of the most popular websites in the world.

We will learn about basic concepts of performance evaluation, implementation, and surveying the academic literature.

Instructors

M.Sc. Daniel Berger

M.Sc. Matthias Schäfer

Follow #PEDSproject and @DISCO_Teaching.

Schedule and Slides

Here is a Google calendar with the tentative schedule.

| Class time | Title | Link |

|---|---|---|

| 28.11.2016 5pm | Introduction and overview (case study: delivery Wikipedia's content) | |

| 07.12.2016 1.30pm | I. Basic analysis (popularity distributions, CDFs, size distributions, etc) | |

| 14.12.2016 5.15pm | II. Statistics and simulation (hit ratios) | |

| 11.01.2017 5.30pm | III. More statistics and advanced simulation (classical caching policies) | |

| 18.01.2017 5.15pm | IV: Better than classical caching policies (the Filter policy) | |

| 25.01.2017 5.15pm | Q&A and general discussion | - |

| 01.02.2017 5.15pm | V: Theory meets practice (bloom filters) | upcoming |

Make your own slides by using the latex/ppt templates or by cloning (File->Make a copy) of this Google slide set.

Student Groups

Group A:

| Ali Taha |

| Anusha Halsnad |

| Manish Kumar |

Group B:

| Oskar Frohn |

| Karla Schäfer |

| Ewa Krajnik |

| Selin Dursun |

Group C:

| Bindu Nagendra |

| Sriharsha Navile Basavaraju |

Anonymized Real-World Trace Data

We will use traces of requests to a Wikipedia cache server. The trace format is:

seq# hashid size

where seq# is a consecutive request counter (per day), hashid is a long integer hash of the accessed page, and the size measures in bytes the size of the requested object.

(Download available from inside the university network. These traces fit the request fornat of the webcachesim open cache simulator. )

Example C++ code to parse such a trace file. This does very little, only outputs the contents of each line of the trace.

#include <fstream>

#include <iostream>

using namespace std;

int main (int argc, char* argv[])

{

const string traceFileName = argv[1];

long time, hashid, size;

ifstream infile;

infile.open(traceFileName);

while (infile >> time >> hashid >> size)

cout << "time: " << time << " hashid: " << hashid << " size " << size << endl;

infile.close();

return 0;

}

Task I (due Dec 7)

- based on one day ("Monday") of wikipedia requests from here (only accessible from within the university network)

- your results:

- Most Popular object : hashed ID 330950210 with 2400065 requests in a single day

- Total number of requests: 198075867

- Total number of objects: 10549934

- Mean object size : 7010 Byes

- Minimum object size: 10 Bytes

- Maximum object size: 707143844 Bytes

- Object Hit ratio of caching 10GB most frequently ued : 0.745589

- Group a: slides and their github repository.

- Group b: n/a

- Group c: slides and their github repository.

Task II (due Dec 14)

- based on seven days of wikipedia requests from here (only accessible from within the university network)

- A) data analysis tasks:

- Difference between daily 100 most-popular objects: the idea is to see how many of the very popular objects of day 1 are still among the very object of day 2. Formally speaking: let X denote the set of the 100 most popular objects of day 1; and let Y denote the set of the 100 most popular objects of day 2. Calculate the number of objects in the intersection of X with Y, and repeat this for day 2 and 3, 3 and 4, etc.

- Solution: 90, 93, 94, 98, 94, 91

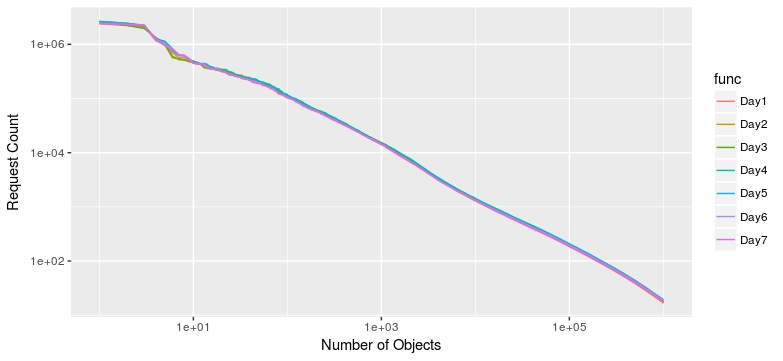

- Popularity Plot: request count of 1st-most-popular, 10th-most-popular, 100th-most-popular, 1000th-most-popular object etc. (consider putting both x-axis and y-axis on a log scale). Create a plot which has this data (as a line) for each day.

- Object size histogram. Object size CDF. Create the CDF as a single plot with separate data (lines) for each day.

- a good example of the popularity plot was given by group c

- B) simulation task: simulate LRU on both a 1-day-long trace and the 7-consecutive-days trace with 10 GB capacity.

- This should be easy, if you reuse the webcachesim open cache simulator

- Downloading, compiling, and running the simulator should be as easy as:

git clone This email address is being protected from spambots. You need JavaScript enabled to view it.:dasebe/webcachesim.git cd webcachesim cmake CMakeLists.txt make ./webcachesim cache.07.01.tr 0 LRU 33.3219 - As an alternative you can directly import from github into CLion IDE (first fork webcachesim into your git account).

- Solution: object hit ratio for all days around 70% and byte hit ratio around 30%

Task III (due Jan 11)

- A) Work with all 7 days worth of traces. Simulator should run through them consecutively.

- Simulate the Cache Policies: LRU, FIFO, GDSF (CS-students: LFU-DA, GDS, S2LRU)

- with Cache capacities: 100GB (CS-students: 1-1000GB)

- Measure both Object and Byte Hit ratio

- (CS-students: Measure throughput: how many requests per second is each policy able to process? )

- B) Literature research

- Find 10 classic/modern caching policies (besides FIFO and LRU) in the literature.

- Add these policies to the following table:

Policy

Name

# Operations per Hit

# Operations per Miss

Memory

Overhead (besides the cached object)

LRU

O(1)

O(1)

(List entry + unordered hash map entry) for each cached object

FIFO

none

O(1)

(List entry + unordered hash map entry) for each cached object

Task IV (due Jan 18)

- Literature on the "one-hit-wonder" problem in Internet content delivery.

- Evaluate a simple implementation of the FILTER cache policy (based on a hash map, e.g., the std::unordered_map)

- OHR/BHR

- throughput

- memory overhead

Task V (due Feb 1)

- Read the literature on "bloom filters". Note that the Wikipedia entry talks mostly about checking whether something has been requested once or never, whereas it is necessary to count for our purposes (i.e., need an integer array instead of a bool array). This paper details the idea of building many hash functions from just two hash functions.

- Familiarize yourself with 128bit-Murmurhash3. Split the 128bit hash into two 64 bit hashes (e.g., access the 128bit hash as an array of 64bit values) and use them to derive them any number of hash functions.

- Implement a simple bloom filter (about 50LOC). Note that realistic values for the Filter policies' N parameter only need 8-10 bits, so an array of uint16 leaves plenty room. Be wary of overruns, however, some objects will get many requests (why not stop incrementing your bloom filter, once we cross the N threshold of the filter policy?)

- Change Filter-LRU and/or Filter-FIFO to use your bloom filter instead of the std map.

- Evaluate the hit ratio, throughput, memory overhead for different variants of the new and old Filter policies

- start with an array size of l=1MB and a number of k=8 hash functions (the slides denote the number of hash functions n, but that collides with the Filter's N, so let's call this k).

- also experiment with l=512KB, 2MB, 4MB, 8MB and k=4,16,32

Final Report Guidelines

- 4 pages of text per person, not counting space taken up by figures (i.e., 2 pages each half text/ half plot count as one page of text).

- Figures and plots need to be legible, clear, with proper axis labels and legends.

- Each figure should have a descriptive caption, and should be referenced and discussed in the text.

- Correctly reference literature and sources, section at end of paper which lists all references.

- We suggest you use Latex as it simplifies referencing and figure placement (use this template (DiscoReport.zip) ).

- Content (brackets indicate percentage of overall text length that we suggest for this section):

- Intro and Problem Statement (10%)

- How does the Wikipedia use caching and why?

- What is the optimal caching policy for the Wikipedia?

- Description of traces, research questions, reference metrics in Section 4

- Literature Research (10%)

- Table of Classical Caching Policies

- References, short discussion of policies

- Statistics For A Week of Wikipedia Requests (10%)

- Popularity distribution line-graph (Zipf's law)

- Popularity over time line-graph (differences between days)

- CDF plot of object sizes, min, mean, max object size

- Cumulative count of one-hit wonders line-graph

- Experimental Setup (10%)

- Metrics to Evaluate CDN Performance

- Object hit ratio (OHR), Byte hit ratio (BHR), throughput, memory overhead

- Define each. Why is each relevant?

- Simulator (how does it work, source)

- Experiment hardware, OS, etc.

- Metrics to Evaluate CDN Performance

- Performance Evaluation of Classical Caching Policies (20%)

- For 10GB cache, BHR and OHR bar-plots for

- LFU (caching the most frequently used objects)

- LRU, FIFO, GDSF, LFU-DA, GDS, S2LRU

- For 10GB cache, throughput box-plot, memory overhead bar-plot

- LRU, FIFO, GDSF, LFU-DA, GDS, S2LRU

- Discussion

- Trade-offs when choosing a classical caching policy

- Plots for 1-1000GB

- Which policy would you choose if you had 1-50GB vs 50-500GB vs 500-1000GB?

- For 10GB cache, BHR and OHR bar-plots for

- Maximizing the BHR with Cache Filters (30%)

- "Simple" Filter 10GB: BHR/OHR bar-plots, throughput box-plot, memory-overhead

- Discussion of "Simple" Filter:

- Parameter N, picking N*

- Comparison to classical policies, +/-

- How to build a low-overhead high-throughput Filter: Bloom-Filter idea.

- Parameters of Bloom-Filter: which ones would you chose?

- Comparison to classical policies and "Simple" Filter

- "Simple" Filter 10GB: BHR/OHR bar-plots, throughput box-plot, memory-overhead

- Conclusions and Future Work (10%)

- What is missing to use your filter in practice? How can you still improve it?

- Alternative ideas to boost the OHR or BHR that you can think of

- References

General Reading References

- How to Collaborate On GitHub

- GitHub Help: Collaborating with issues and pull requests

- 7 Basic rules of data plotting

- RStudio Tutorial

- R is the most popular software for statistical data analysis

- R-blogger's Basic Introduction to ggplot2

- CLion C++ IDE: Quick Start Guide

- JetBrains: Free for Students (including CLion IDE)

We offer a variety of bachelor and master

We offer a variety of bachelor and master